research

Brief Descriptions of my current and past projects

I am very grateful to be advised by Prof. Swabha Swayamdipta and to be collaborating with students in the USC NLP Department and USC Dworak-Peck School of Social Work!

High-Level Motivation

My research interests lie in investigating how language models can help us understand social issues, both by exploring collaborative settings between humans and generative models and by investigating applications of language models for social good. To this end, I am excited about quantifying the success and failure modes of models in reasoning about societal issues, and about developing scalable computational methods to make sense of data on the issues our society faces today.

What I am currently working on

Extracting the Social and Relational Dynamics in Suicide Reports

At the time of a victim’s passing, officials generate reports detailing the victim’s interactions with other individuals and their ongoing struggles. While existing literature places heavy emphasis on the design of mental health interventions, the relational dynamics between victims and non-clinical professionals (e.g., attorneys and lawyers) are relatively less explored. Identifying and characterizing these interactions can be challenging, as they are often obscured by indirect references to legal professionals rather than explicit mentions. Furthermore, manually reading these reports is emotionally taxing for domain experts.

In this project, we aim to:

- Characterize the relational dynamics between victims and non-clinical professionals in suicide reports, using LLMs as assistants

- Develop a methodology for extracting variables of interest from suicide reports with LLMs by exploring strategies to convert unstructured text into tables

- Provide domain experts with insights from our findings to assist them in developing improved interventions for suicide prevention
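One way to picture the text-to-table step is to prompt an LLM to answer in JSON with a fixed set of fields, then validate each response before it becomes a table row. The sketch below covers only that parsing and validation step, assuming such a JSON prompt. The field names (`mentions_legal_professional`, `professional_type`, `interaction_summary`) and the helpers `parse_llm_output` and `build_table` are hypothetical placeholders, not our actual codebook or pipeline. Responses that fail validation are set aside for human review rather than guessed at.

```python
from __future__ import annotations

import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical schema: these field names are illustrative placeholders,
# not the project's actual variables of interest.
REQUIRED_FIELDS = ("mentions_legal_professional", "professional_type", "interaction_summary")


@dataclass
class ReportRow:
    report_id: str
    mentions_legal_professional: bool
    professional_type: Optional[str]
    interaction_summary: Optional[str]


def parse_llm_output(report_id: str, raw: str) -> Optional[ReportRow]:
    """Convert one LLM response (expected to be a JSON object) into a table row.

    Returns None if the response is not valid JSON, is missing a field,
    or has a non-boolean flag, so the report can be routed to a human reviewer.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict) or any(f not in data for f in REQUIRED_FIELDS):
        return None
    if not isinstance(data["mentions_legal_professional"], bool):
        return None
    return ReportRow(report_id=report_id, **{f: data[f] for f in REQUIRED_FIELDS})


def build_table(responses: dict[str, str]) -> tuple[list[ReportRow], list[str]]:
    """Split LLM responses into parsed rows and report IDs that need human review."""
    rows: list[ReportRow] = []
    needs_review: list[str] = []
    for report_id, raw in responses.items():
        row = parse_llm_output(report_id, raw)
        if row is None:
            needs_review.append(report_id)
        else:
            rows.append(row)
    return rows, needs_review
```

Keeping the schema fixed and rejecting malformed output means every row in the final table has the same columns, and nothing the model failed to express cleanly is silently filled in.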

Past Work and Projects

Characterizing Attitudes Towards Homelessness on Social Media

Social media discourse about homelessness elicits a diverse spectrum of attitudes that are confounded with complex socio-political factors, making it extremely challenging to characterize attitudes towards homelessness on social media at scale. Furthermore, even toxicity classifiers and LLMs fail to capture the nuances of this discourse, which motivates the need for structured pragmatic frames that enable a more comprehensive understanding of it.

In this project, we aim to:

- Evaluate the effectiveness of language models at reasoning about attitudes towards homelessness by developing a codebook grounded in framing theory from sociology (Goffman, 1974)

- Finetune models on an expert-annotated dataset to infer the frames defined in our codebook

- Conduct analyses along socio-political dimensions to characterize how attitudes differ across regions

We also explore the effectiveness of LLMs in reasoning about complex social media discourse on sensitive social topics by evaluating GPT-3.5 as an annotator in the loop for labeling a dataset on homelessness. We find that even approaches combining Chain-of-Thought prompting with GPT-3.5 are only useful for predicting broad, coarse themes in this discourse. We therefore incorporate human validation into our annotation pipeline to capture finer-grained nuances.
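A common way to gauge how useful an LLM annotator is, and to decide which items need human validation, is to compare its labels against expert labels with a chance-corrected agreement score. The sketch below computes Cohen's kappa and lists the items where the two annotators disagree. It is an illustration of that general technique, not our exact evaluation protocol, and the helper names are hypothetical.

```python
from __future__ import annotations

from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa between two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or len(labels_a) == 0:
        raise ValueError("need two equally long, non-empty label sequences")
    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement if each annotator labeled at random with their own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_expected == 1.0:
        # Both annotators used one identical label for every item.
        return 1.0
    return (p_observed - p_expected) / (1.0 - p_expected)


def disagreements(labels_a: Sequence[str], labels_b: Sequence[str]) -> list[int]:
    """Indices where the annotators disagree (candidates for human validation)."""
    return [i for i, (a, b) in enumerate(zip(labels_a, labels_b)) if a != b]
```

Kappa is preferred over raw accuracy here because a model that always predicts the most common coarse theme can look accurate while adding little beyond chance.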

Furthermore, in our data, the structured frames surface themes unique to this domain; the most salient theme uses homelessness as a vehicle for critiquing government structures.

The application of LLMs in social science domains is still largely unexplored due to the socio-political complexities of issues such as homelessness. Prior work on attitudes towards homelessness is grounded in qualitative surveys and small-scale ethnographic studies. We motivate the necessity of structured pragmatic frames for comprehensively understanding these issues and for further exploring the use of LLMs to understand public discourse.

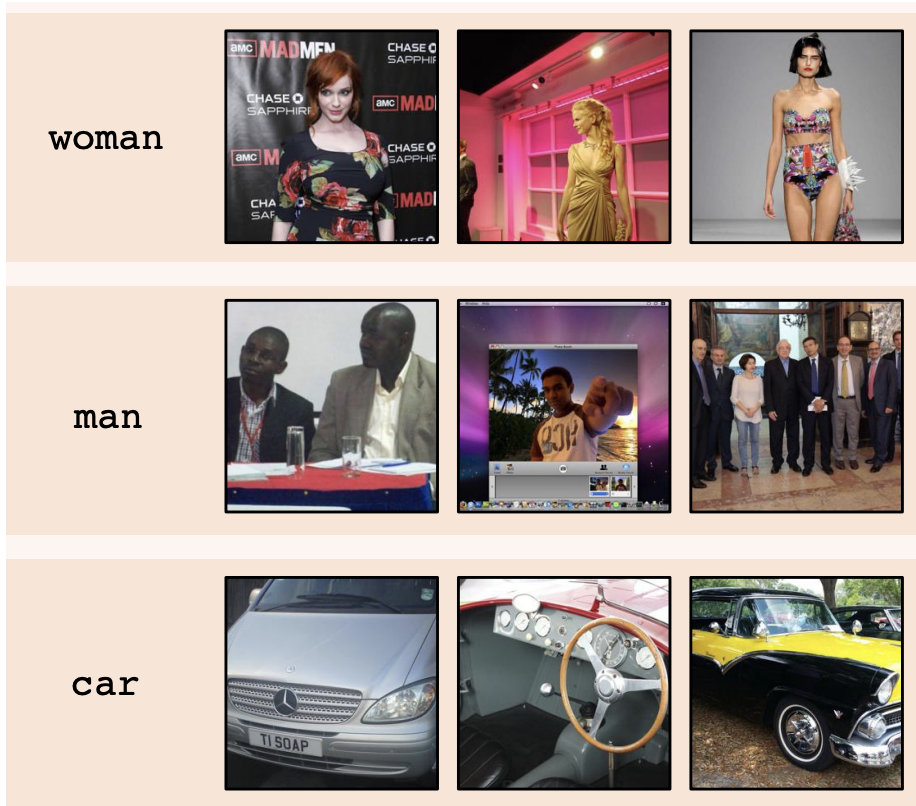

Variation of Gender Biases in Visual Recognition Models Before and After Finetuning

In collaboration with Tianlu Wang, Baishakhi Ray, and Vicente Ordonez, we introduce a framework to measure how biases change before and after finetuning a large-scale visual recognition model for a downstream task.

Many computer vision systems today rely on models pretrained on large-scale datasets. While bias mitigation techniques have been developed for tuning models on downstream tasks, it is currently unclear what effects the biases already encoded in a pretrained model have. Our framework incorporates sets of canonical images representing individual concepts and pairs of concepts to highlight changes in biases across an array of off-the-shelf pretrained models that vary in model size, dataset size, and training objective.
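To make "a change in bias" concrete, here is one simple association score in the style of embedding association tests. It is a stand-in chosen for illustration, not necessarily the measure used in our paper. Embed the canonical images for a concept and for two gender groups, compare mean cosine similarities, and take the difference of that score after versus before finetuning. The embeddings are assumed to be given as NumPy arrays (for example, features extracted from the model); how they are obtained is left out, and the function names are hypothetical.

```python
import numpy as np


def _normalize(x) -> np.ndarray:
    """Scale each row (one image embedding) to unit length."""
    x = np.asarray(x, dtype=float)
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def association(concept, group_a, group_b) -> float:
    """Mean cosine similarity of concept images to group A minus to group B.

    Each argument is an (n_images, dim) array of embeddings from one model.
    Positive values mean the concept sits closer to group A in embedding space.
    """
    c, a, b = _normalize(concept), _normalize(group_a), _normalize(group_b)
    return float((c @ a.T).mean() - (c @ b.T).mean())


def bias_shift(before, after) -> float:
    """Change in association from the pretrained to the finetuned model.

    `before` and `after` are (concept, group_a, group_b) triples of embeddings
    computed with the pretrained and the finetuned model, respectively.
    """
    return association(*after) - association(*before)
```

A shift near zero would suggest the finetuned model kept the pretrained association for that concept, while a large shift in either direction would flag a concept worth inspecting.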

Through our analyses, we find that:

- Supervised models trained on datasets such as ImageNet-21k are more likely than self-supervised models to retain their pretraining biases, regardless of the target dataset.

- Models finetuned on larger-scale datasets are more likely to introduce new biased associations.

- Our results also suggest that biases can transfer to finetuned models, and that the finetuning objective and dataset can affect the extent of the transferred biases.

Our work was recently accepted at the Workshop on Algorithmic Fairness through the Lens of Time at NeurIPS 2023. New Orleans, LA.

2023

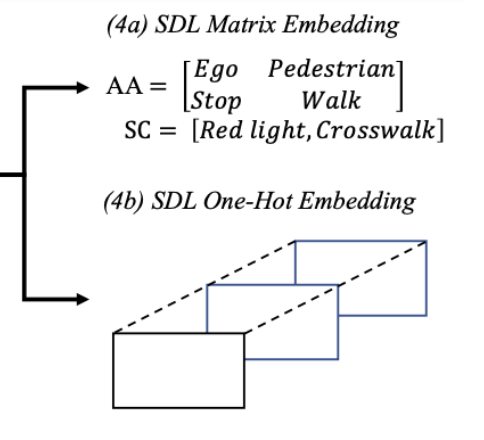

Scenario2Vector: scenario description language based embeddings for traffic situations

In collaboration with Aron Harder and Madhur Behl, we propose Scenario2Vector, a Scenario Description Language (SDL) based embedding for traffic situations that allows us to automatically search large AV datasets for similar traffic situations. Our SDL embedding distills a traffic situation experienced by an AV into its canonical components: actors, actions, and the traffic scene. We can then use this embedding to evaluate the similarity of different traffic situations in vector space.
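As a rough illustration of embedding a scenario by its canonical components, the sketch below turns actors, actions, and the scene into a fixed-length count vector and retrieves the most similar scenarios by cosine similarity. The vocabularies, the count encoding, and the names `Scenario`, `embed`, and `most_similar` are hypothetical simplifications, not the actual Scenario2Vector encoding.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# Hypothetical vocabularies; a real SDL defines its own actor, action, and scene types.
ACTORS = ["car", "truck", "pedestrian", "cyclist"]
ACTIONS = ["go_straight", "turn_left", "turn_right", "stop", "lane_change"]
SCENES = ["intersection", "highway", "parking_lot", "residential"]


@dataclass
class Scenario:
    actors: list[str]   # one entry per actor present
    actions: list[str]  # one entry per observed action
    scene: str


def embed(s: Scenario) -> list[float]:
    """Concatenate actor counts, action counts, and a one-hot scene indicator."""
    actor_part = [float(s.actors.count(a)) for a in ACTORS]
    action_part = [float(s.actions.count(a)) for a in ACTIONS]
    scene_part = [1.0 if s.scene == sc else 0.0 for sc in SCENES]
    return actor_part + action_part + scene_part


def cosine(u: list[float], v: list[float]) -> float:
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def most_similar(query: Scenario, candidates: list[Scenario], k: int = 3) -> list[int]:
    """Indices of the k candidates closest to the query (ties keep input order)."""
    q = embed(query)
    scores = [cosine(q, embed(c)) for c in candidates]
    order = sorted(range(len(candidates)), key=lambda i: -scores[i])
    return order[:k]
```

Because each component occupies its own slice of the vector, two scenarios only score highly when they share actors, actions, and scene type; a weighted or learned encoding could replace the raw counts.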

Safety assessments for automated vehicles need to evolve beyond the existing voluntary self-reporting. There is currently no comprehensive yardstick for comparing how far each AV developer has progressed on safety. Our goal in this research is to answer the following question: How can we fairly compare two different AV implementations? In doing so, this work aims to make progress toward an innovative certification method that allows a fair comparison between AVs by evaluating them on similar traffic situations.

The goal of our research is to provide a common metric that facilitates the comparison of different autonomous vehicle algorithms. To compare different AVs, we need to observe them under similar traffic conditions, or scenarios, so we aim to find similar traffic scenarios in the datasets generated by different AVs. Having found similar traffic situations, we can then observe whether one AV's output is safer or closer to optimal than another's. To this end, we also present a first-of-its-kind Traffic Scenario Similarity (TSS) dataset. This dataset contains 100 traffic video samples (scenarios), and for each sample it contains 6 candidate scenario videos ranked by human participants according to their similarity to the baseline sample.
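The TSS dataset makes it possible to check whether an embedding orders candidates the way people do. One standard way to do that, assumed here rather than taken from the paper, is Spearman's rank correlation between the human ranking of the 6 candidates and the ranking induced by similarity scores. The sketch below uses the closed-form formula 1 - 6·Σd² / (n(n² - 1)), which is exact only when there are no tied ranks; the function names are hypothetical.

```python
from __future__ import annotations


def ranks_from_scores(scores: list[float]) -> list[int]:
    """Rank 1 = most similar; ties are broken by candidate index."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])
    ranks = [0] * len(scores)
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    return ranks


def spearman_rho(ranks_a: list[int], ranks_b: list[int]) -> float:
    """Spearman's rho via 1 - 6*sum(d^2) / (n(n^2 - 1)); exact for tie-free rankings."""
    n = len(ranks_a)
    if n < 2 or n != len(ranks_b):
        raise ValueError("need two rankings of the same length (at least 2 items)")
    d_squared = sum((a - b) ** 2 for a, b in zip(ranks_a, ranks_b))
    return 1.0 - 6.0 * d_squared / (n * (n * n - 1))
```

Averaging rho over all 100 baseline samples would give a single number for how closely an embedding's notion of similarity tracks human judgment.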

Our work was accepted in the Proceedings of the ACM/IEEE 12th International Conference on Cyber-Physical Systems at ICCPS 2021. Nashville, TN.