Uncovering Intervention Opportunities for Suicide Prevention with Language Model Assistants

Jaspreet Ranjit, Hyundong J. Cho, Claire J. Smerdon, and 5 more authors

In EAAMO’25, GenAI4Health NeurIPS’25, 2025

Runner-up for best doctoral oral presentation at ShowCAIS 2025

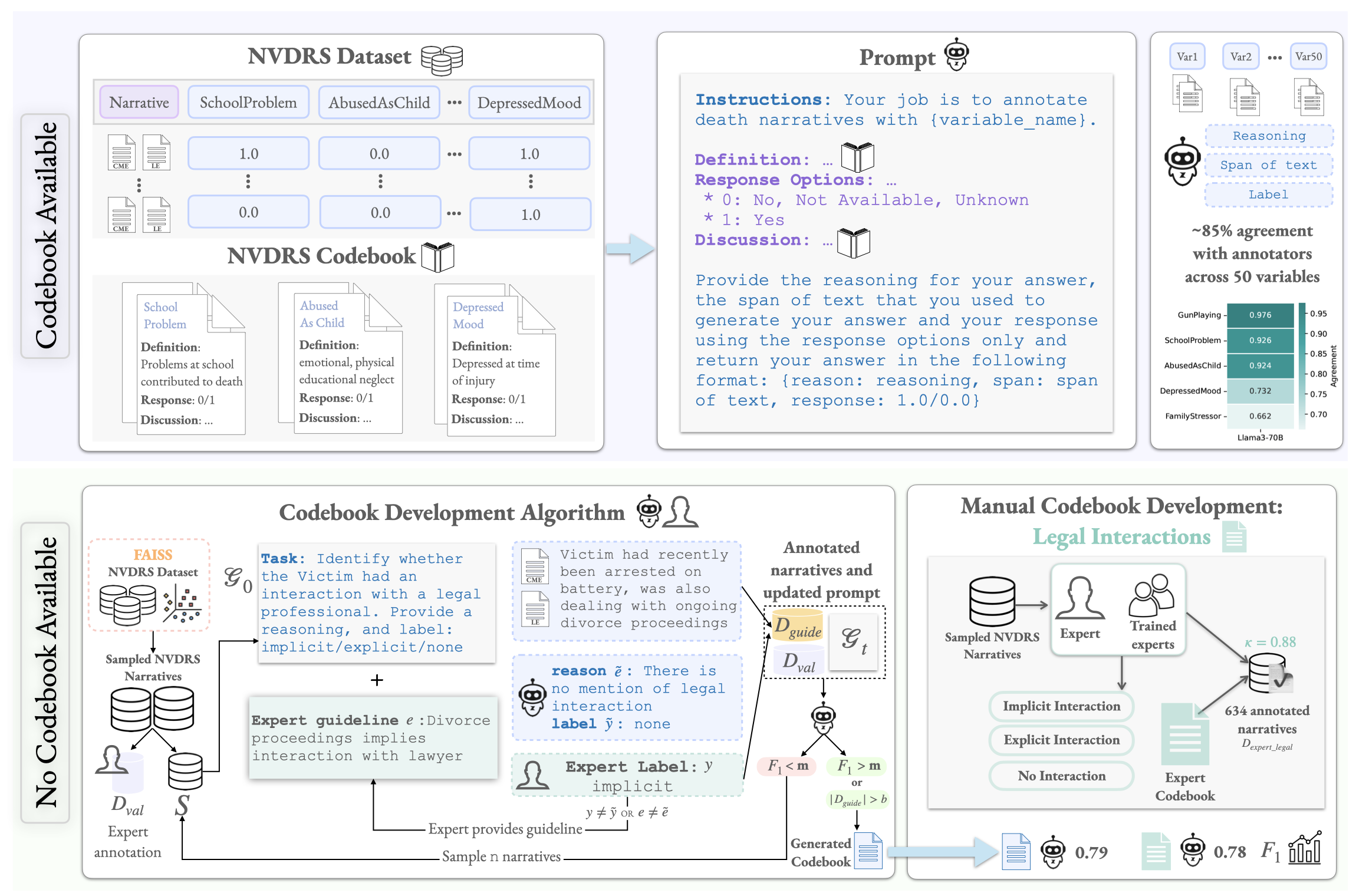

The National Violent Death Reporting System (NVDRS) documents information about suicides in the United States, including free text narratives (e.g., circumstances surrounding a suicide). In a demanding public health data pipeline, annotators manually extract structured information from death investigation records following extensive guidelines developed painstakingly by experts. In this work, we facilitate data-driven insights from the NVDRS data to support the development of novel suicide interventions by investigating the value of language models (LMs) as efficient assistants to these (a) data annotators and (b) experts. We find that LM predictions match existing data annotations about 85% of the time across 50 NVDRS variables. In the cases where the LM disagrees with existing annotations, expert review reveals that LM assistants can surface annotation discrepancies 38% of the time. Finally, we introduce a human-in-the-loop algorithm to assist experts in efficiently building and refining guidelines for annotating new variables by allowing them to focus only on providing feedback for incorrect LM predictions. We apply our algorithm to a real-world case study for a new variable that characterizes victim interactions with lawyers and demonstrate that it achieves comparable annotation quality with a laborious manual approach. Our findings provide evidence that LMs can serve as effective assistants to public health researchers who handle sensitive data in high-stakes scenarios.

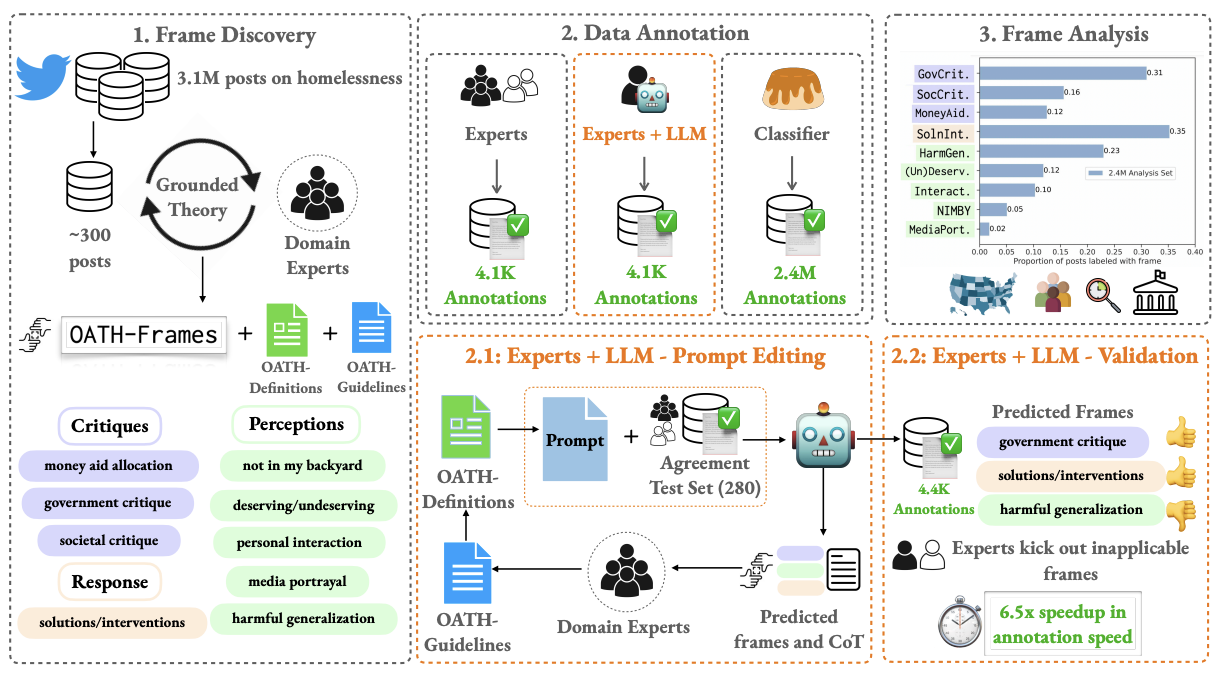

OATH-Frames: Characterizing Online Attitudes Towards Homelessness via LLM Assistants

In Proceedings of EMNLP, 2024

Anchorless object detection for 3D point cloud object detection

Expedition Technology Blog, 2019